This is Why I Went to Medical School

Like many radiologists, I often find myself complaining about my workflow and saying “this isn’t why I went to med school” or “I didn’t go to med school to do X”. I thought it would make a nice change to say exactly why I did go to med school, and what I hope the radiology profession will become with the aid of technology.

In medical school, I was motivated by the idea that the radiologist was in a unique position to investigate and solve problems. We would be the first to see the all-important visual evidence, and it would be up to us to provide a salient analysis of what it all meant. This is what I’m supposed to do today, but in reality, there is so much that prevents me from being the radiologist I want to be.

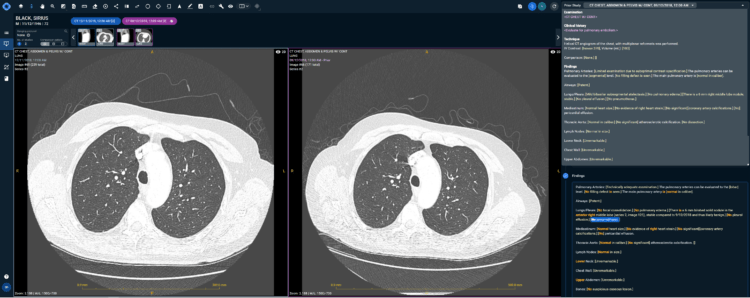

What I want from the radiology profession is quite simple: I want to discuss my cases the way a doctor would talk to a patient. Of course, the patient isn’t in the room, but I want the freedom to say what I see in real time, in the order I choose and in a cohesive way, without interruptions to my investigative train of thought.

Eyes on the prize

Anything that distracts me from reading an image has the potential to reduce the quality of my work. The incessant disruption to flow makes it very easy to forget important information, so reading a case becomes more than a matter of medical competency – it’s also a test of short-term memory.

I’m certainly not the only rad who feels set up to fail by the memory traps we face every day. Because I am generally confined to the order of a report template, I often can’t dictate a finding at the moment it first catches my eye. Instead, I have to hold it in the back of my mind while I scroll through more slices, dictating as I go, and then recall it when I reach the right section of the template.

Structured reporting without structured input

I want to dictate freely in my own words and have them fit into a template, without taking my eyes off the viewer screen. No pausing to ask, ‘is this in the right section of the report?’ and no checking my other monitor to see whether the report is populating the way I want it to. I just want to dictate the patient’s findings in the natural flow of everyday speech, and have the system adapt them to whichever format is most useful to the recipient, typically a referring physician or the patient.

A prior future

Comparing prior imaging (and prior reports) is the other fundamental process that shapes my ultimate judgement as a radiologist, and, just as with the viewer-reporter axis, my flow here is constantly interrupted.

A very common ‘memory trap’ here is having to dictate the description and date of the prior study I am using for comparison. This kind of menial administrative task should not be necessary in this day and age, and it is prone to error. I’ve gotten confused calls from referring physicians because I accidentally said the wrong year. Why can’t the details of the prior I’m comparing against automatically populate the comparison section of the report?

I also want to be able to search prior reports for findings that have already been reported without my knowing. If I see a 2.5 cm mass in the right kidney, for example, I have to painstakingly scroll through priors to find a previous mention of it before I can make a more informed decision about its characteristics, i.e. whether it is benign or potentially malignant. It would be so much easier if the word ‘mass’, or synonyms such as ‘lesion’, could be tagged to the right kidney, or even just to the ‘KIDNEY’ section of the template. This would reduce both missed findings and unnecessary follow-up studies.
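To make the tagging idea concrete, here is a minimal Python sketch, assuming prior reports are stored as plain text keyed by template section. The synonym table, the `PriorReport` structure, the `find_prior_mentions` helper and the sample reports are all hypothetical and made up for illustration; a real system would need a proper medical vocabulary rather than a hand-written word list.

```python
import re
from dataclasses import dataclass

# Hypothetical synonym table: each surface term (including plurals) maps to a
# canonical concept, so 'lesion' and 'mass' are treated as the same finding.
SYNONYMS = {
    "mass": "mass", "masses": "mass",
    "lesion": "mass", "lesions": "mass",
    "nodule": "mass", "nodules": "mass",
    "cyst": "cyst", "cysts": "cyst",
}


@dataclass
class PriorReport:
    date: str                  # ISO date of the prior study, e.g. "2021-02-10"
    description: str           # study description, e.g. "MRI abdomen"
    sections: dict[str, str]   # template section name -> dictated text


def concepts_in(text: str) -> set[str]:
    """Return the canonical concepts mentioned anywhere in `text`."""
    words = re.findall(r"[a-z]+", text.lower())
    return {SYNONYMS[w] for w in words if w in SYNONYMS}


def find_prior_mentions(priors: list[PriorReport], section: str, term: str):
    """Return (date, description, sentence) for every sentence in the given
    template section of a prior report that mentions the same concept as
    `term`, most recent study first."""
    concept = SYNONYMS.get(term.lower(), term.lower())
    hits = []
    for report in sorted(priors, key=lambda r: r.date, reverse=True):
        text = report.sections.get(section, "")
        # Naive sentence split on a period followed by whitespace, so
        # decimals such as "2.5 cm" are not broken apart.
        for sentence in re.split(r"(?<=\.)\s+", text):
            if concept in concepts_in(sentence):
                hits.append((report.date, report.description, sentence.strip()))
    return hits


# Made-up example priors for illustration only.
priors = [
    PriorReport("2019-06-01", "CT abdomen",
                {"KIDNEY": "1.8 cm lesion in the right kidney. Left kidney normal."}),
    PriorReport("2021-02-10", "MRI abdomen",
                {"KIDNEY": "Stable right renal mass, 2.0 cm."}),
]

for hit in find_prior_mentions(priors, "KIDNEY", "mass"):
    print(hit)
```

Searching for ‘mass’ in the KIDNEY section surfaces both the 2021 ‘mass’ and the 2019 ‘lesion’, newest first, which is exactly the scroll-through-priors step the sketch is meant to replace.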

Why unification is key

The separation of the viewer and reporter functions is the main cause of the ‘memory traps’ I keep referring to. Keeping the two in sync is essentially my responsibility, and it is one I wish I didn’t have. A communication standard between the viewer and reporter would not solve the whole problem. Take the prior study date: how would the reporter know which date to pull from all of the various prior studies?

This requires a unified system with contextual understanding to know when I’m reviewing the current study, when I’m reviewing a prior study (and which one), etc. Likewise, to allow for free dictation, the system would need to understand which part of the anatomy I’m reviewing at any given moment to ensure the dictation goes into the correct sections of the report.
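To make the idea of shared context concrete, here is a minimal Python sketch. It assumes, hypothetically, that the viewer can tell the reporter which study and which anatomical region are currently on screen; the names `ReadingContext`, `on_viewer_focus` and `on_dictation`, and the sample studies, are invented for illustration and do not correspond to any real product’s API. The same context answers the prior-date question: whichever prior I actually open is the one written into the comparison section.

```python
from dataclasses import dataclass, field


@dataclass
class Study:
    accession: str
    description: str
    date: str  # ISO date of the study


@dataclass
class ReportDraft:
    sections: dict[str, list[str]] = field(default_factory=dict)
    comparisons: list[str] = field(default_factory=list)


class ReadingContext:
    """Hypothetical shared state between the viewer and the reporter."""

    def __init__(self, current: Study, priors: list[Study]):
        self.current = current
        self.priors = {s.accession: s for s in priors}
        self.active_study = current
        self.active_section = "FINDINGS"
        self.draft = ReportDraft()

    def on_viewer_focus(self, accession: str, anatomy: str) -> None:
        """Called by the viewer whenever the displayed study or anatomy changes."""
        self.active_section = anatomy.upper()
        if accession == self.current.accession:
            self.active_study = self.current
            return
        # Opening a prior fills the comparison section from its own metadata,
        # so the date never has to be dictated (or misremembered).
        prior = self.priors[accession]
        self.active_study = prior
        line = f"{prior.description}, {prior.date}"
        if line not in self.draft.comparisons:
            self.draft.comparisons.append(line)

    def on_dictation(self, text: str) -> None:
        """Route dictated text to the report section for the anatomy on screen."""
        self.draft.sections.setdefault(self.active_section, []).append(text)


# Illustrative session with made-up studies.
ctx = ReadingContext(Study("A2", "CT abdomen/pelvis", "2024-05-01"),
                     [Study("A1", "CT abdomen/pelvis", "2022-03-15")])
ctx.on_viewer_focus("A2", "liver")
ctx.on_dictation("No focal hepatic lesion.")
ctx.on_viewer_focus("A1", "kidney")   # glance at the prior
ctx.on_viewer_focus("A2", "kidney")   # back to the current study
ctx.on_dictation("2.5 cm right renal mass.")
print(ctx.draft)
```

The design choice worth noting is that dictation order no longer matters: each phrase lands in the section matching what is on screen when it is spoken, and the template structure is applied afterwards rather than imposed on the reading.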

In simple terms, automated contextual understanding between the different elements of the radiologist workflow will spare me the clicking, dragging and remembering I currently need to do in order to keep the machine running. With the menial taken care of, I will have more freedom to exercise medical judgement. That’s why we all went to med school, right?